View In-Context Analytics in Studio

In-Context Analytics introduces a new frontend plugin slot. The slot can be used with Aspects, or it can be customized to display metrics from other systems.

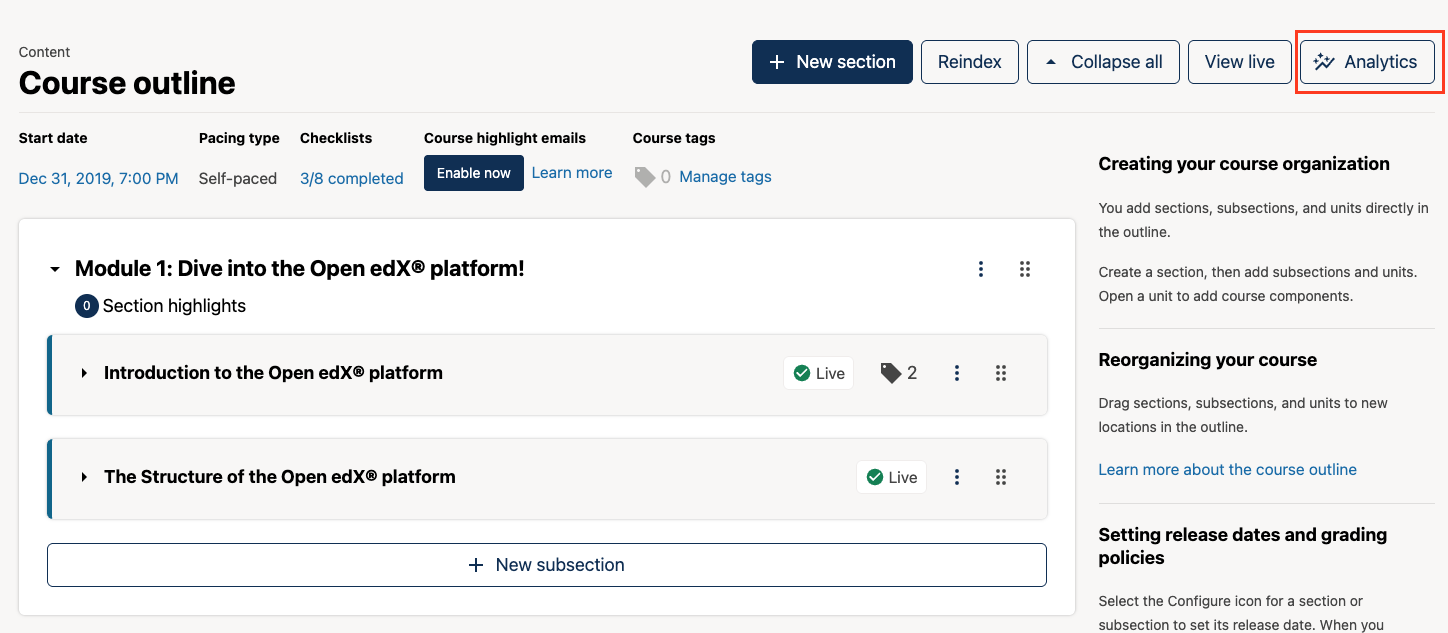

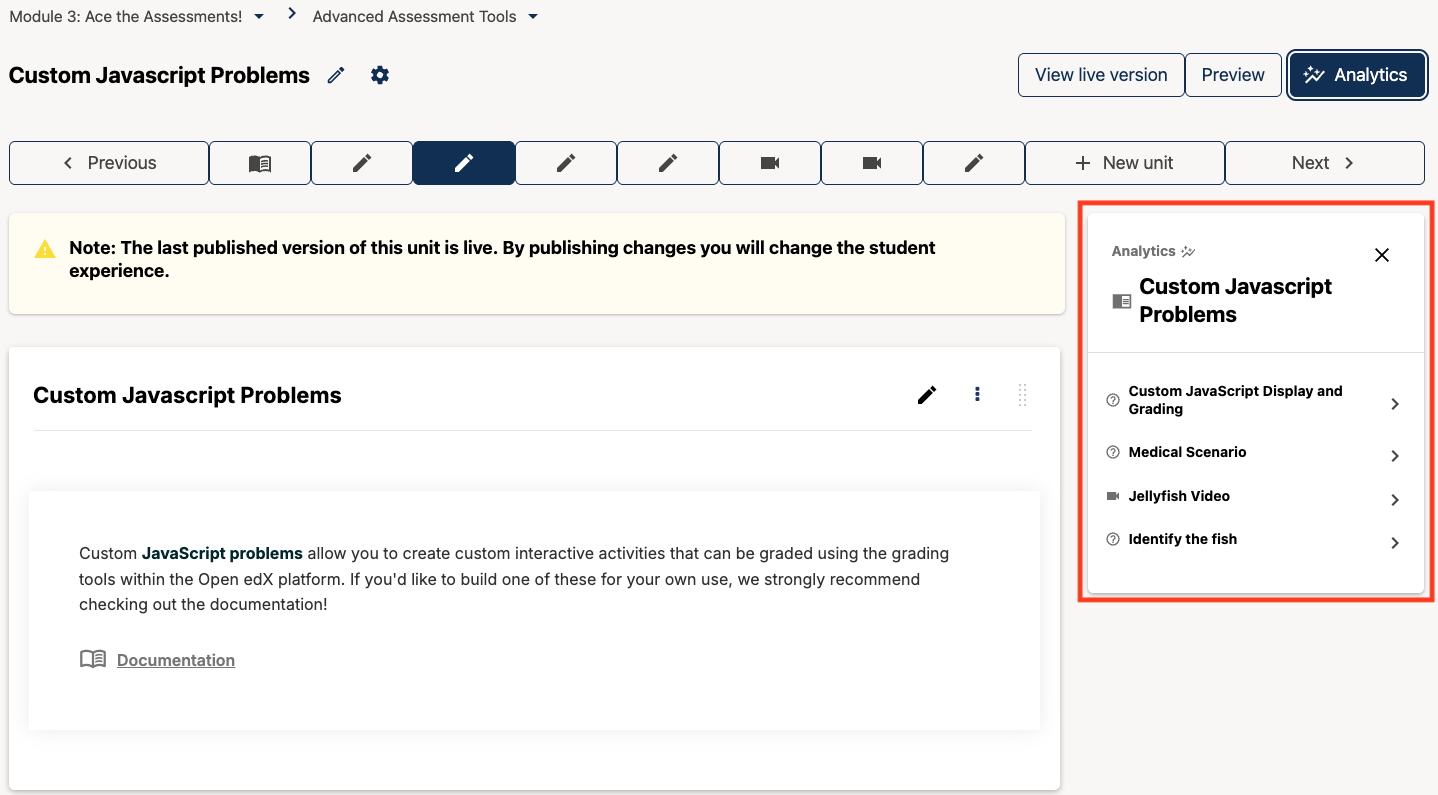

After In-Context Analytics is enabled, users will see a new Analytics button at the top of the Course Outline and Unit pages.

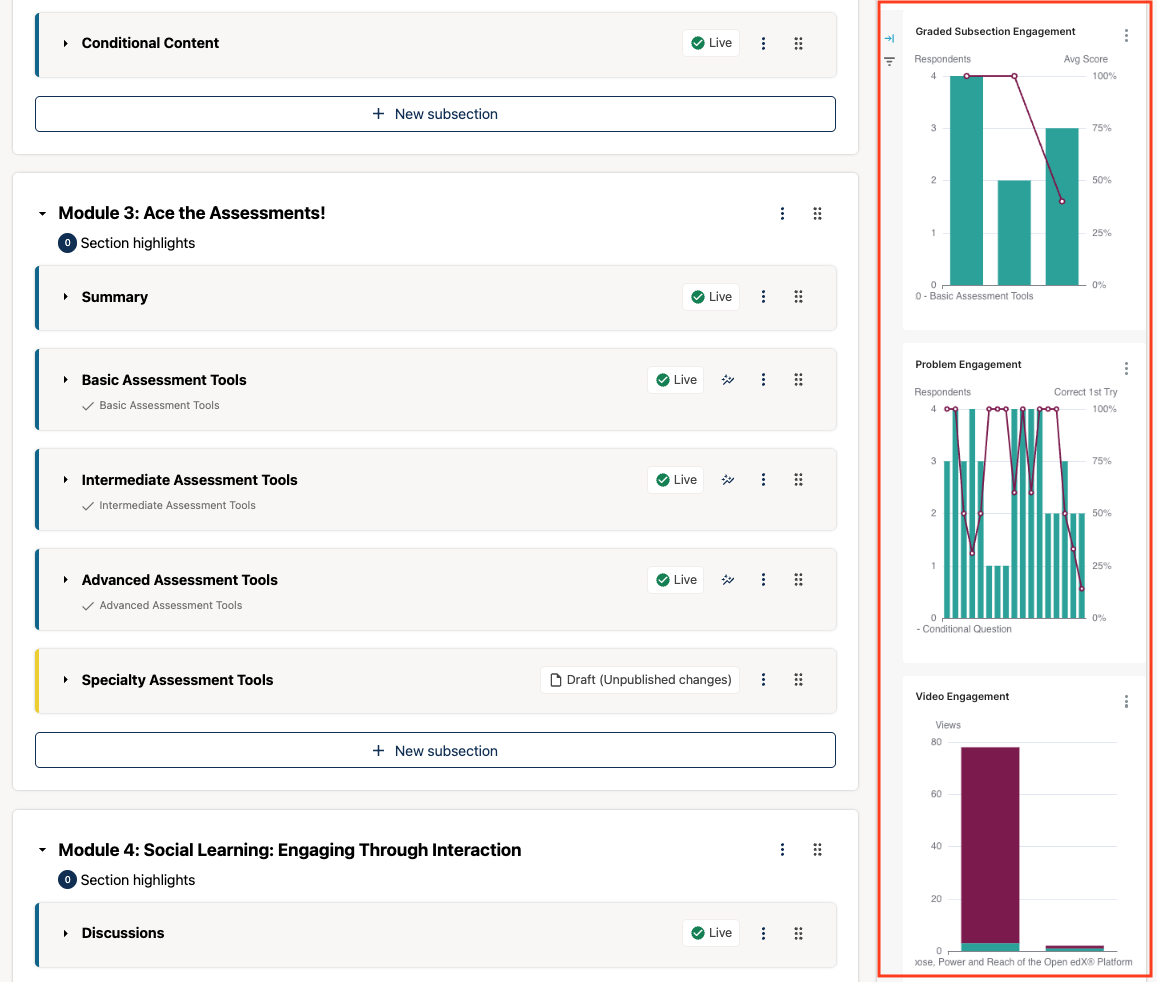

Click the Analytics button to open a collapsible sidebar in Studio. The sidebar displays content engagement and performance data alongside the course outline and its problem and video components.

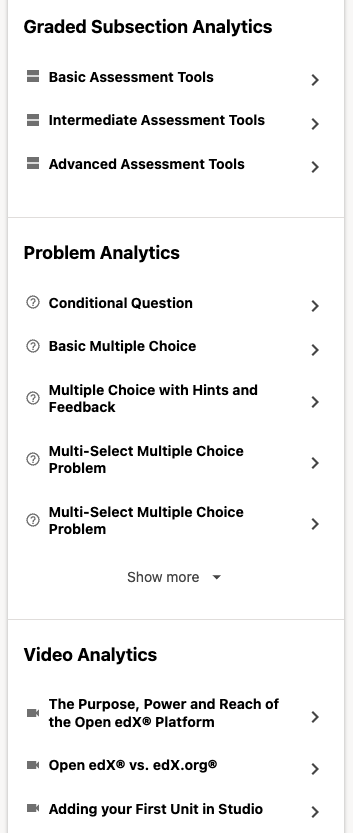

To drill down into more detailed data about a single graded subsection, problem, or video, scroll to the menu beneath the engagement charts and select the subsection or component of interest.

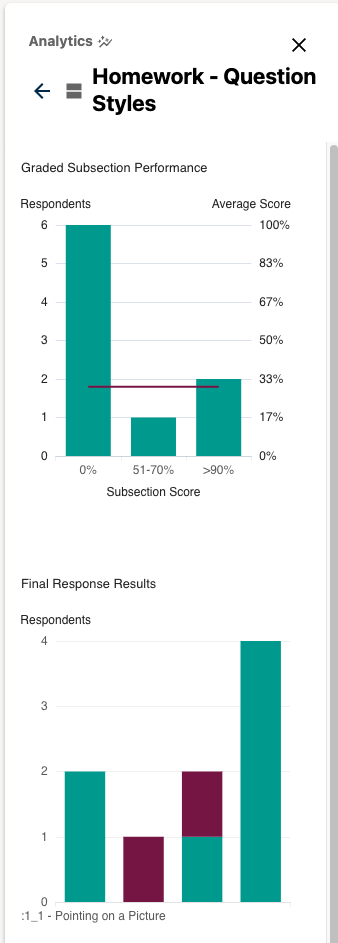

Select a graded subsection to view two charts. The first chart shows the number of respondents in each score range for the subsection, along with the average subsection score for all learners who attempted at least one problem in it. The second chart shows the total number of correct and incorrect responses for each problem in the subsection.
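To make the first chart's definitions concrete, here is a minimal Python sketch that buckets learners into score ranges and averages only over learners who attempted at least one problem. It assumes a hypothetical input of `{learner_id: (percent_score, problems_attempted)}` and a hypothetical range width of 20 percentage points; it is an illustration, not how Aspects actually computes the chart.

```python
from collections import Counter


def summarize_subsection(learner_scores, range_width=20):
    """Return (histogram, average) for one graded subsection.

    learner_scores: hypothetical {learner_id: (percent_score, problems_attempted)}.
    range_width: hypothetical width of each score range, in percentage points.
    """
    # Only learners who attempted at least one problem are counted.
    attempted = {lid: pct for lid, (pct, n) in learner_scores.items() if n > 0}

    histogram = Counter()
    for pct in attempted.values():
        # Lower bound of the range; a perfect score goes in the top range.
        low = min(int(pct // range_width) * range_width, 100 - range_width)
        histogram[low] += 1

    average = sum(attempted.values()) / len(attempted) if attempted else 0.0
    return dict(sorted(histogram.items())), average


# Hypothetical example data; learner "d" attempted nothing and is excluded.
scores = {"a": (100.0, 3), "b": (50.0, 2), "c": (40.0, 1), "d": (0.0, 0)}
hist, avg = summarize_subsection(scores)
```

Here two learners fall in the 40-60 range and one in the 80-100 range, and the average is taken over three learners rather than four.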

Navigate to a specific Unit. Compared with the analytics on the Course Outline page, the In-Context Analytics sidebar on the Unit page gives more granular insight into how learners engage with one course component at a time. In the sidebar, click a component to see more detailed data for it.

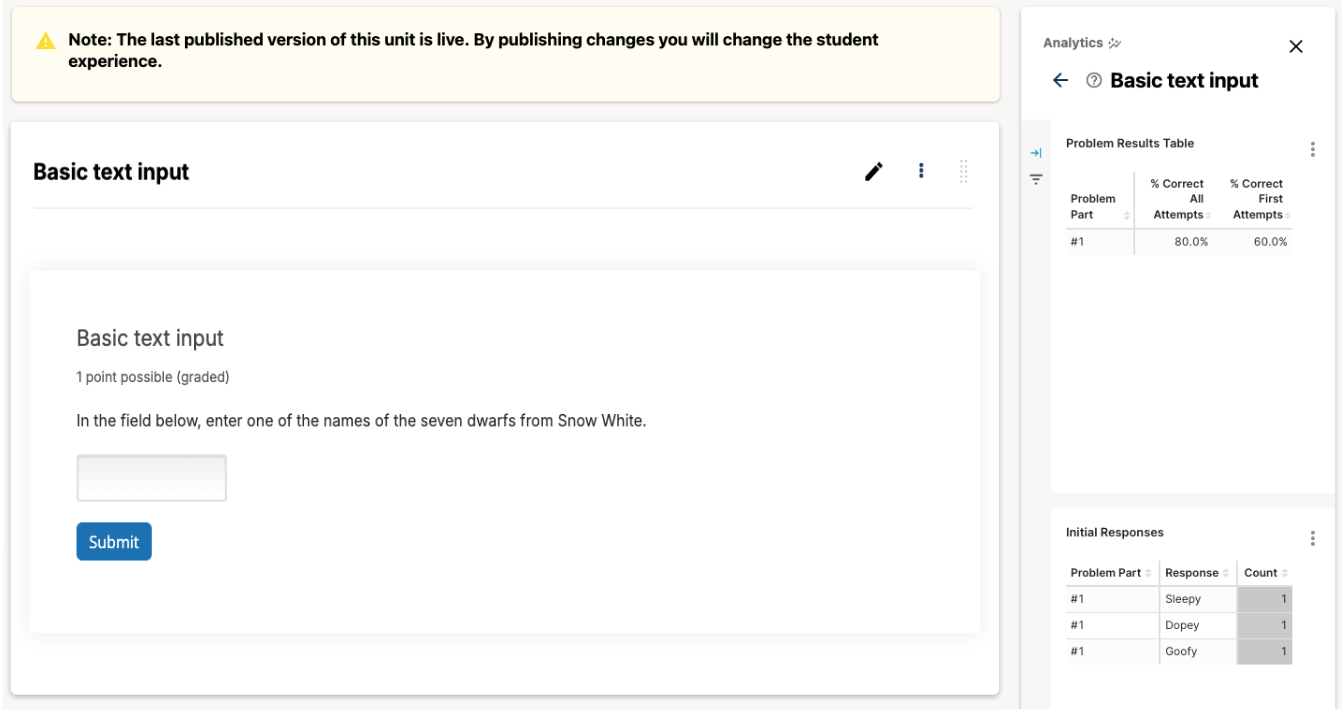

Select a specific problem to scroll the window to that component. The sidebar shows the percentage of correct responses on the first attempt and across all attempts. A second table breaks down learners' initial responses to the problem.

Note

The percentage of correct responses on the first attempt is a good indicator of how difficult the problem is for the learners who submitted a response, and of whether learners immediately understand the question and can identify the correct answer. The percentage correct across all attempts indicates how well learners recovered from earlier incorrect responses. A higher percentage correct across all attempts indicates that learners are able to figure out the right answer with additional effort or hints. If this percentage is still low, the problem may be too difficult or confusing for learners.

The second table gives course delivery teams a peek into learners' thought processes when they approached the problem for the first time. This breakdown gives course authors quick insight into exactly how learners are getting a problem wrong, especially for difficult problems, and can point course delivery teams to where learners are getting confused.
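The difference between the two percentages, and the initial-response breakdown, can be sketched in a few lines of Python. This is a minimal illustration assuming a hypothetical `Attempt` record with one row per submission, and it assumes "all attempts" means correct submissions divided by all submissions; the real Aspects event schema and formulas may differ.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Attempt:
    # Hypothetical record shape; not the actual Aspects schema.
    learner_id: str
    attempt_number: int  # 1 = the learner's first submission
    response: str
    correct: bool


def first_attempt_pct(attempts):
    """Percent of learners whose first submission was correct."""
    firsts = [a for a in attempts if a.attempt_number == 1]
    if not firsts:
        return 0.0
    return 100.0 * sum(a.correct for a in firsts) / len(firsts)


def all_attempts_pct(attempts):
    """Percent of all submissions (first and later) that were correct."""
    if not attempts:
        return 0.0
    return 100.0 * sum(a.correct for a in attempts) / len(attempts)


def initial_response_breakdown(attempts):
    """How many learners chose each answer on their first submission."""
    return Counter(a.response for a in attempts if a.attempt_number == 1)


# Hypothetical data: two learners answer "B", then recover with "C".
attempts = [
    Attempt("a", 1, "B", False), Attempt("a", 2, "C", True),
    Attempt("b", 1, "C", True),
    Attempt("c", 1, "B", False), Attempt("c", 2, "C", True),
    Attempt("d", 1, "A", False),
]
```

With this data, 25% of first attempts are correct but 50% of all attempts are, which reflects learners recovering after an initial mistake. The breakdown shows that "B" is the most common wrong first answer, the kind of pattern the second table is meant to surface.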

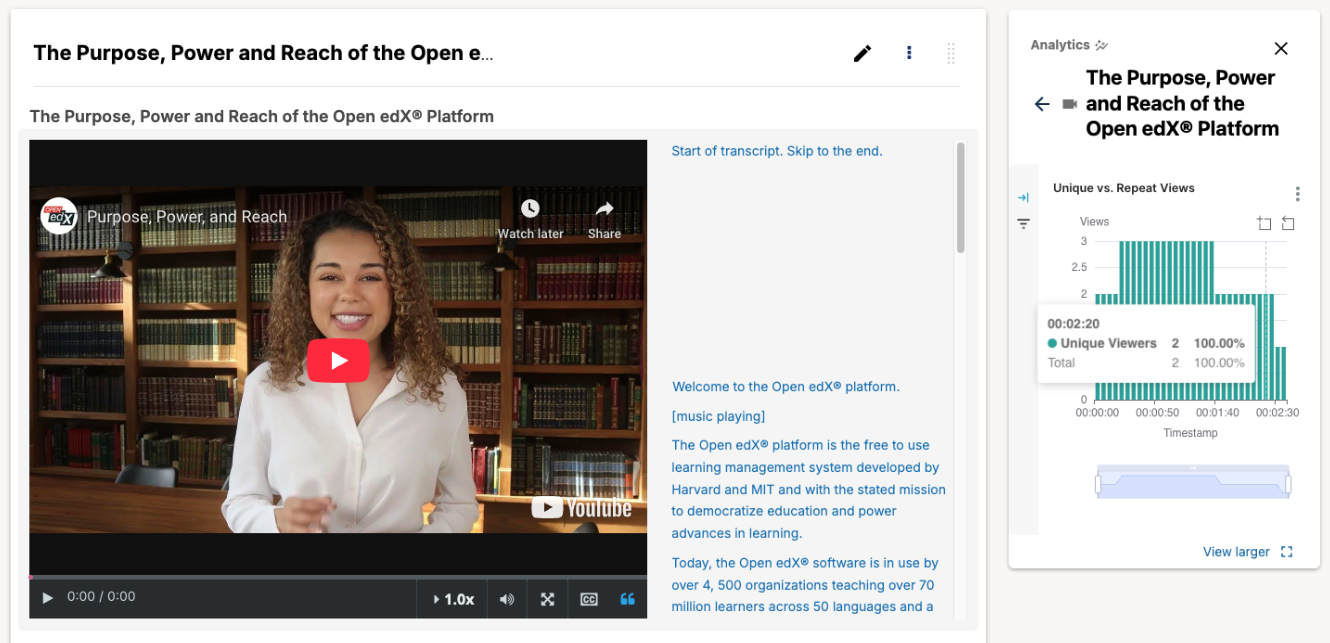

Select a specific video to scroll the window to that component. The sidebar shows the number of unique and repeat views for the video at each timestamp across its duration.

Note

Review timestamp ranges with a large number of repeat views, as they may indicate that this section of the video is unclear to learners.
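The following minimal Python sketch shows one way unique and repeat views per timestamp range could be derived and the most-rewatched ranges found. It assumes a hypothetical list of play segments `(learner_id, start_second, end_second)` with integer seconds and a hypothetical 30-second range width; it is not the query Aspects uses.

```python
from collections import defaultdict


def views_by_bucket(plays, bucket_seconds=30):
    """Return {bucket_start: (unique_views, repeat_views)}.

    plays: hypothetical (learner_id, start_second, end_second) segments,
    end exclusive. Each segment counts once for every range it overlaps.
    """
    viewers = defaultdict(list)
    for learner_id, start, end in plays:
        first = (start // bucket_seconds) * bucket_seconds
        for bucket in range(first, end, bucket_seconds):
            viewers[bucket].append(learner_id)

    result = {}
    for bucket, learners in sorted(viewers.items()):
        unique = len(set(learners))
        # Every view beyond a learner's first one in this range is a repeat.
        result[bucket] = (unique, len(learners) - unique)
    return result


def most_rewatched(buckets, top_n=3):
    """Range start times with the most repeat views, highest first."""
    return sorted(buckets, key=lambda b: buckets[b][1], reverse=True)[:top_n]


# Hypothetical data: learners "a" and "b" both replay the 30-60 s range.
plays = [
    ("a", 0, 90), ("b", 0, 60), ("c", 0, 30),
    ("a", 30, 60), ("b", 30, 60),
]
buckets = views_by_bucket(plays)
```

In this example the 30-60 second range has two unique and two repeat views, so it would be the first candidate to review.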

See also

Configure Aspects for Production (how to)

In-Context Dashboards (reference)

Introducing In-Context Analytics in Studio (reference)

Maintenance chart

| Review Date | Working Group Reviewer | Release | Test situation |
|---|---|---|---|
| 2025-06-06 | Data WG | Teak | Pass |
| 2025-10-21 | Data WG | Ulmo | Pass |